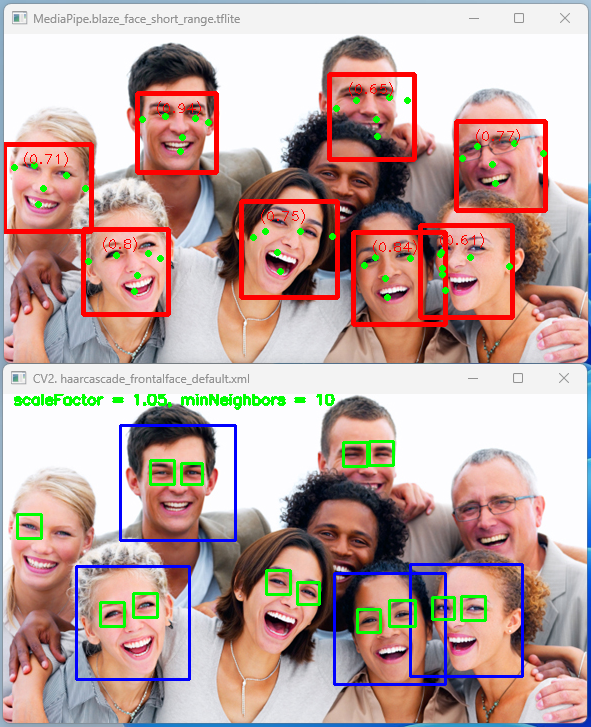

Once I had done the face detection project, gesture recognition was much easier to understand, and you can do it too. It gives me a more solid foundation for studying deep learning; I want to create my own object recognition model and have the drone fly with it. Before that, I'd like to try body detection and swarm flying as my next projects. OK, let's see how we make this happen on our drone.

Basic Concept

- Capture video frame from the drone

- Use a hand detection tool to find the hands in the frame and pick out the left hand.

- Based on the detected hand information (the positions of the wrist, knuckles and finger joints) and some simple logic, recognize the gesture and use it to control the drone.

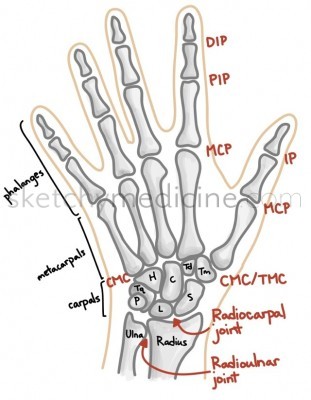

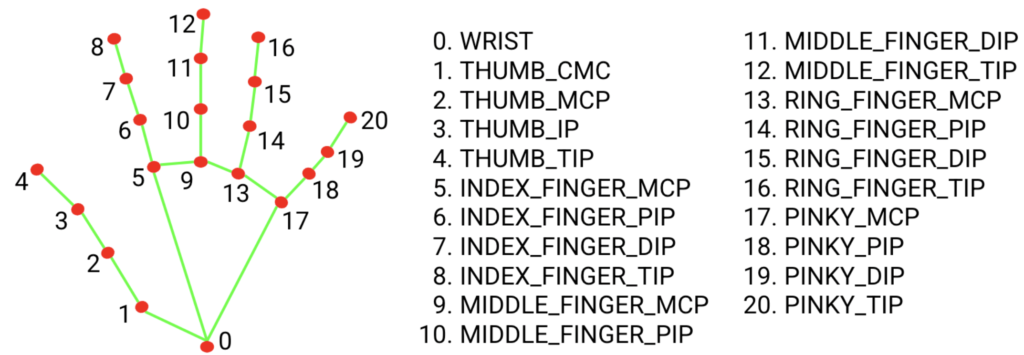

Understanding your hand

First of all, let's understand our hands… XD

I got this picture from sketchymedicine.com. It contains a lot of medical sketches and detailed information. Very cool website.

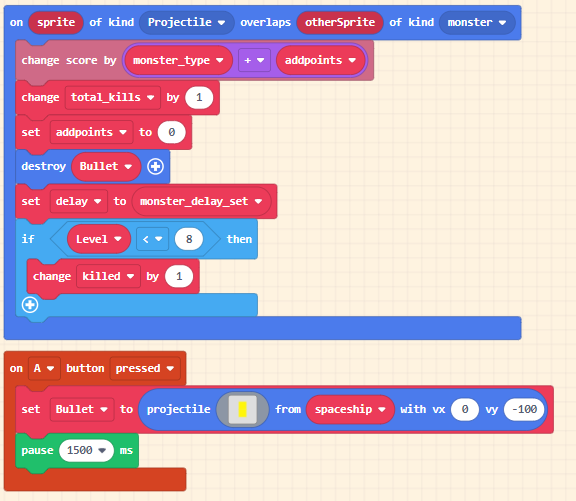

Program with MediaPipe

See below for the full program:

```python
from djitellopy import Tello
import cv2
import mediapipe as mp
import threading
import logging
import time

# Assign tello to the Tello class and set the log level to errors only
tello = Tello()
tello.LOGGER.setLevel(logging.ERROR)  # Ignore INFO messages from Tello

fly = False  # For debugging: set to True to actually take off

# Assign the MediaPipe hands detection solution to mpHands and define the confidence levels
mpHands = mp.solutions.hands
hands = mpHands.Hands(min_detection_confidence=0.8, min_tracking_confidence=0.8)

# When we detect a hand, mp.solutions.drawing_utils can plot the landmarks and connections
mpDraw = mp.solutions.drawing_utils


def hand_detection(tello):
    global gesture

    while True:
        # Read the frame from Tello and mirror it to the user's point of view
        frame = tello.get_frame_read().frame
        frame = cv2.flip(frame, 1)

        # Call hands from the MediaPipe Solution for hand detection; the frame must be RGB
        result = hands.process(frame)

        # Read the frame width & height instead of using the fixed numbers 960 & 720
        frame_height = frame.shape[0]
        frame_width = frame.shape[1]

        my_hand = []

        if result.multi_hand_landmarks:
            for handlms, handside in zip(result.multi_hand_landmarks, result.multi_handedness):
                if handside.classification[0].label == 'Right':  # Skip the right hand information
                    continue

                # Plot the landmarks and connect them with the default MediaPipe style
                mpDraw.draw_landmarks(frame, handlms, mpHands.HAND_CONNECTIONS,
                                      mp.solutions.drawing_styles.get_default_hand_landmarks_style(),
                                      mp.solutions.drawing_styles.get_default_hand_connections_style())

                # Convert the landmarks from ratios into pixel positions according to the frame size.
                # Landmark indices in my_hand[]:
                # wrist = 0, thumb = 1 - 4, index = 5 - 8, middle = 9 - 12, ring = 13 - 16, little = 17 - 20
                for i, landmark in enumerate(handlms.landmark):
                    x = int(landmark.x * frame_width)
                    y = int(landmark.y * frame_height)
                    my_hand.append((x, y))

                # Left hand control with the pre-defined logic:
                # Except for the thumb, compare the fingertip y position with the PIP joint y position.
                # The thumb uses the x position for the comparison.
                # Stop: a fist
                # Land: open hand
                # Right: only the thumb open
                # Left: only the little finger open
                # Up: only the index finger open
                # Down: thumb and index finger open
                # Come: index and middle fingers open
                # Away: index, middle and ring fingers open
                finger_on = []
                if my_hand[4][0] > my_hand[2][0]:
                    finger_on.append(1)
                else:
                    finger_on.append(0)
                for i in range(1, 5):
                    if my_hand[4 + i*4][1] < my_hand[2 + i*4][1]:
                        finger_on.append(1)
                    else:
                        finger_on.append(0)

                gesture = 'Unknown'
                if sum(finger_on) == 0:
                    gesture = 'Stop'
                elif sum(finger_on) == 5:
                    gesture = 'Land'
                elif sum(finger_on) == 1:
                    if finger_on[0] == 1:
                        gesture = 'Right'
                    elif finger_on[4] == 1:
                        gesture = 'Left'
                    elif finger_on[1] == 1:
                        gesture = 'Up'
                elif sum(finger_on) == 2:
                    if finger_on[0] == finger_on[1] == 1:
                        gesture = 'Down'
                    elif finger_on[1] == finger_on[2] == 1:
                        gesture = 'Come'
                elif sum(finger_on) == 3 and finger_on[1] == finger_on[2] == finger_on[3] == 1:
                    gesture = 'Away'
        elif gesture != 'Landed':
            # No hand in view: fall back to 'Unknown' so the drone hovers
            # instead of repeating the last command
            gesture = 'Unknown'

        cv2.putText(frame, gesture, (10, 50), cv2.FONT_HERSHEY_SIMPLEX, 2, (255, 0, 0), 3)
        # Swap the colour channels for display (cv2.imshow expects BGR)
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        cv2.imshow('Tello Video Stream', frame)
        cv2.waitKey(1)

        if gesture == 'Landed':
            break


########################
# Start of the program #
########################

# Connect to the drone via Wi-Fi
tello.connect()

# Instruct Tello to start the video stream and make sure the first frame is read
tello.streamon()
while True:
    frame = tello.get_frame_read().frame
    if frame is not None:
        break

# Start the hand detection thread
gesture = 'Unknown'
video_thread = threading.Thread(target=hand_detection, args=(tello,), daemon=True)
video_thread.start()

# Take off
time.sleep(1)
if fly:
    tello.takeoff()
    tello.set_speed(10)
    time.sleep(2)
    tello.move_up(80)

while True:
    hV = dV = vV = rV = 0
    if gesture == 'Land':
        break
    elif gesture == 'Stop' or gesture == 'Unknown':
        hV = dV = vV = rV = 0
    elif gesture == 'Right':
        hV = -15
    elif gesture == 'Left':
        hV = 15
    elif gesture == 'Up':
        vV = 20
    elif gesture == 'Down':
        vV = -20
    elif gesture == 'Come':
        dV = 15
    elif gesture == 'Away':
        dV = -15
    tello.send_rc_control(hV, dV, vV, rV)
    time.sleep(0.05)  # Short pause so this loop doesn't hog the CPU from the detection thread

# Land the drone
if fly:
    tello.land()
gesture = 'Landed'

# Stop the video stream
tello.streamoff()

# Show the battery level before ending the program
print("Battery :", tello.get_battery())
```

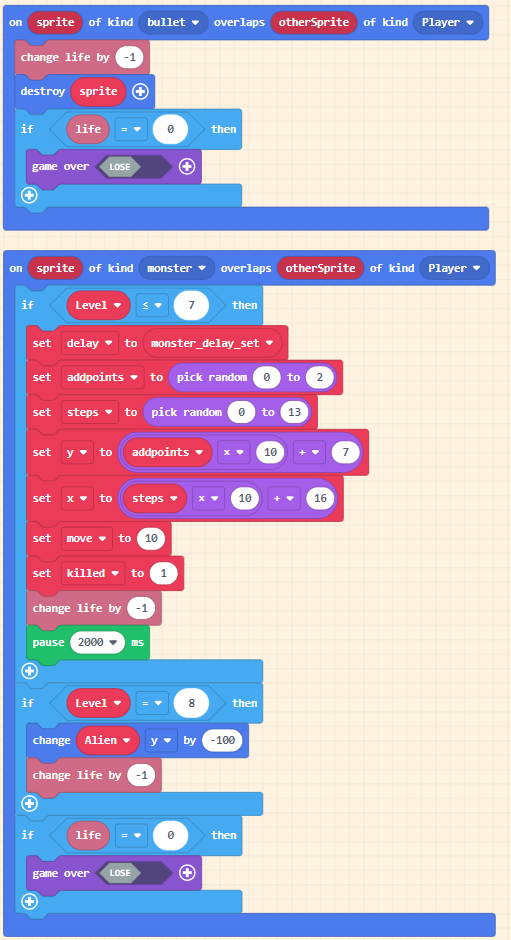

Hand Detection

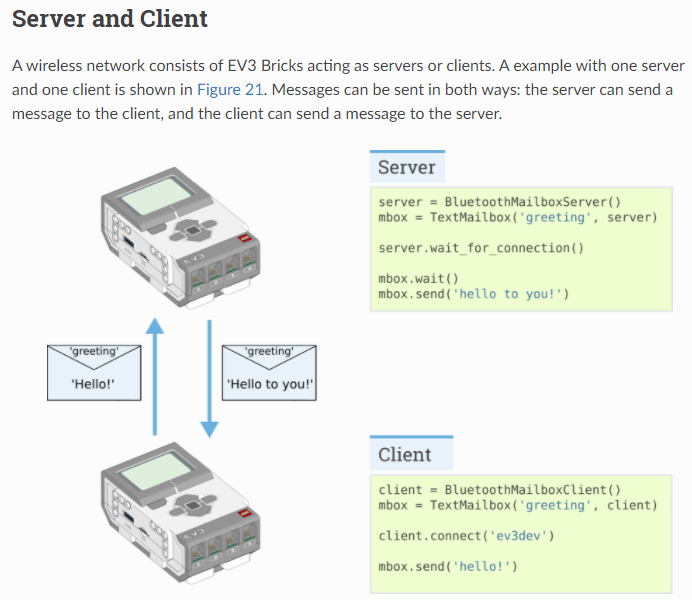

```python
# Assign the MediaPipe hands detection solution to mpHands and define the confidence levels
mpHands = mp.solutions.hands
hands = mpHands.Hands(min_detection_confidence=0.8, min_tracking_confidence=0.8)
```

From MediaPipe, we are going to use the MediaPipe Solution Hands for our hand detection. A few parameters are important to us:

- min_detection_confidence (listed as min_hand_detection_confidence on the MediaPipe website) – the first step, identifying a hand in a single frame. It sets the detection score at which a hand counts as detected. The lower the number, the more objects pass, but the higher the chance of errors; the higher the number, the clearer and more precise the hand image needs to be.

- min_tracking_confidence – once a hand has been detected, MediaPipe tracks its landmarks from frame to frame instead of detecting it again. If the tracking score drops below this number, hand detection runs again on the next frame.

You can refer to the MediaPipe Official Website for more information; they also have a demo website where you can play with different models. However, I found that the website documents the newer MediaPipe Tasks Hand Landmarker, and its options min_hand_presence_confidence and running_mode do not exist in the mp.solutions.hands API used here. This is what I found in hands.py:

```python
# Hands.__init__ signature, as found in MediaPipe's hands.py
def __init__(self,
             static_image_mode=False,
             max_num_hands=2,
             model_complexity=1,
             min_detection_confidence=0.5,
             min_tracking_confidence=0.5):
    ...  # body omitted
```

It took me 30 minutes of playing with the demo and reading the documentation to understand the difference between min_hand_detection_confidence and min_hand_presence_confidence.

```python
gesture = 'Unknown'
video_thread = threading.Thread(target=hand_detection, args=(tello,), daemon=True)
video_thread.start()
```

Just like what we did in face detection, we run the hand detection function in a separate thread (parallel processing) to capture and analyze the hand position. It keeps updating a global variable, gesture, so that the drone movement control can take the corresponding action.
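The thread-plus-global pattern above works because, in CPython, rebinding a global such as gesture is effectively atomic. If you want the hand-off to be more explicit, here is a minimal pure-Python sketch (no drone or MediaPipe needed) that keeps the latest gesture behind a lock and uses a threading.Event to stop the worker instead of the 'Landed' sentinel value. SharedGesture and detector() are hypothetical names for illustration; detector() just replays a fixed list of gestures in place of real hand detection.

```python
import threading
import time


class SharedGesture:
    """Hypothetical helper: holds the latest gesture behind a lock."""

    def __init__(self, initial="Unknown"):
        self._lock = threading.Lock()
        self._value = initial

    def set(self, value):
        with self._lock:
            self._value = value

    def get(self):
        with self._lock:
            return self._value


def detector(state, stop, gestures):
    """Stand-in for hand_detection(): publishes fake gestures until told to stop."""
    for g in gestures:
        if stop.is_set():
            break
        state.set(g)
        time.sleep(0.01)  # pretend each frame takes ~10 ms to process


state = SharedGesture()
stop = threading.Event()
worker = threading.Thread(target=detector, args=(state, stop, ["Up", "Stop", "Land"]), daemon=True)
worker.start()

# Main loop: poll the latest gesture, like the drone-control loop does
while state.get() != "Land":
    time.sleep(0.005)

stop.set()  # takes the place of gesture = 'Landed'
worker.join(timeout=1)
print(state.get())  # Land
```

In the drone program, the control loop would call state.get() where it currently reads gesture, and stop.set() would replace setting gesture to 'Landed'.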

```python
# Read the frame from Tello
frame = tello.get_frame_read().frame
frame = cv2.flip(frame, 1)

# Call hands from the MediaPipe Solution for hand detection; the frame must be RGB
result = hands.process(frame)
```

Get the latest frame from the drone and flip it horizontally, from the camera's point of view to our point of view. The flip also matters for handedness: MediaPipe assumes the input image is mirrored (as from a selfie camera), so after flipping, the 'Left' label matches your real left hand. Then we process the frame with hands, which was defined at the beginning of the program. Once hand detection is done, result contains the following information:

- result.multi_handedness – whether each detected hand is a 'Left' or 'Right' hand.

- result.multi_hand_landmarks – for each hand, 21 points called landmarks, as shown below; each landmark includes the x, y & z position. The x and y coordinates are normalized to [0.0, 1.0] by the image width and height, respectively. The z coordinate represents the landmark depth, with the depth at the wrist being the origin.

- result.multi_hand_world_landmarks – the 21 hand landmarks are also presented in world coordinates. Each landmark is composed of x, y and z, representing real-world 3D coordinates in meters with the origin at the hand's geometric center.

```python
if result.multi_hand_landmarks:
    for handlms, handside in zip(result.multi_hand_landmarks, result.multi_handedness):
        if handside.classification[0].label == 'Right':  # Skip the right hand information
            continue
```

If one or more hands are detected, result contains the data we need. Since we only use the left hand to control the drone, we skip the right-hand data.

```python
mpDraw = mp.solutions.drawing_utils

mpDraw.draw_landmarks(frame, handlms, mpHands.HAND_CONNECTIONS,
                      mp.solutions.drawing_styles.get_default_hand_landmarks_style(),
                      mp.solutions.drawing_styles.get_default_hand_connections_style())
```

Then, we use drawing_utils from MediaPipe to highlight the landmarks and connect them.

```python
# Convert all the hand information from a ratio into an actual position according to the frame size
for i, landmark in enumerate(handlms.landmark):
    x = int(landmark.x * frame_width)
    y = int(landmark.y * frame_height)
    my_hand.append((x, y))
```

This retrieves the x & y data from result.multi_hand_landmarks, converts it into pixel positions according to the frame size, and stores it in the list my_hand for the gesture analysis.

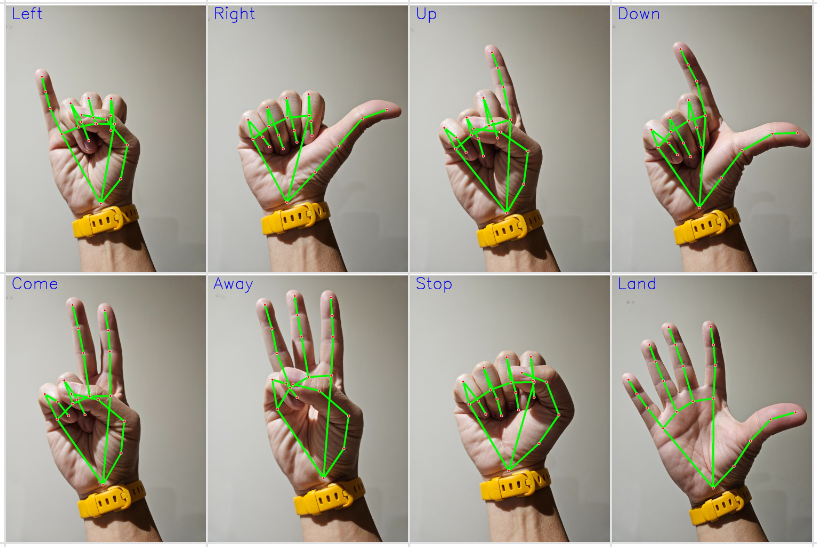

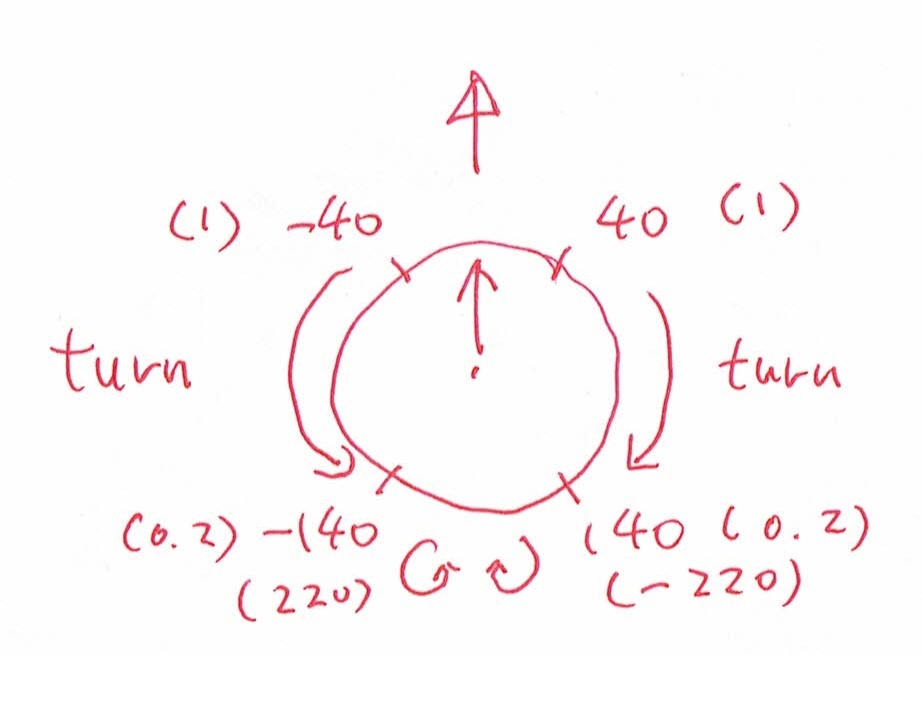

Logic for different gestures – Finger open or closed?

We simply compare the y position of each fingertip (TIP) with the middle joint of the same finger (PIP, landmarks 6, 10, 14 and 18). For the thumb, we compare the x position of the fingertip (TIP) with its knuckle (MCP, landmark 2), as follows:

When the thumb is open, the fingertip x is bigger than the knuckle x. When a finger is open, the fingertip y is smaller than the PIP y, because image y values grow downward.

```python
finger_on = []
# Thumb: compare the x position of the tip (4) with the MCP knuckle (2)
if my_hand[4][0] > my_hand[2][0]:
    finger_on.append(1)
else:
    finger_on.append(0)
# Other fingers: compare the y position of the tip (8, 12, 16, 20) with the PIP joint (6, 10, 14, 18)
for i in range(1, 5):
    if my_hand[4 + i*4][1] < my_hand[2 + i*4][1]:
        finger_on.append(1)
    else:
        finger_on.append(0)

gesture = 'Unknown'
if sum(finger_on) == 0:
    gesture = 'Stop'
elif sum(finger_on) == 5:
    gesture = 'Land'
elif sum(finger_on) == 1:
    if finger_on[0] == 1:
        gesture = 'Right'
    elif finger_on[4] == 1:
        gesture = 'Left'
    elif finger_on[1] == 1:
        gesture = 'Up'
elif sum(finger_on) == 2:
    if finger_on[0] == finger_on[1] == 1:
        gesture = 'Down'
    elif finger_on[1] == finger_on[2] == 1:
        gesture = 'Come'
elif sum(finger_on) == 3 and finger_on[1] == finger_on[2] == finger_on[3] == 1:
    gesture = 'Away'
```

We compare the fingers one by one and record the results in the list finger_on, where 1 represents open and 0 represents closed. Mapping this to our target gestures:

- Left – finger_on = [0, 0, 0, 0, 1]

- Right – finger_on = [1, 0, 0, 0, 0]

- Up – finger_on = [0, 1, 0, 0, 0]

- Down – finger_on = [1, 1, 0, 0, 0]

- Come – finger_on = [0, 1, 1, 0, 0]

- Away – finger_on = [0, 1, 1, 1, 0]

- Stop – finger_on = [0, 0, 0, 0, 0]

- Land – finger_on = [1, 1, 1, 1, 1]

Then, we use if/elif statements to determine the variable gesture according to the finger_on result.

It might be fun to use binary numbers to represent the above gesture determination.
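Following up on that idea, here is a minimal sketch that packs finger_on into a 5-bit number (thumb as the highest bit) and looks the gesture up in a dictionary. It assumes finger_on has the [thumb, index, middle, ring, little] order used above; GESTURES, to_bits() and classify() are hypothetical names. Because every gesture in the list above is one exact pattern, the lookup gives the same result as the if/elif chain, and anything else falls through to 'Unknown'.

```python
# Hypothetical mapping: finger_on is [thumb, index, middle, ring, little],
# and the thumb becomes the highest bit, so [1, 0, 0, 0, 0] -> 0b10000.
GESTURES = {
    0b00001: 'Left',
    0b10000: 'Right',
    0b01000: 'Up',
    0b11000: 'Down',
    0b01100: 'Come',
    0b01110: 'Away',
    0b00000: 'Stop',
    0b11111: 'Land',
}


def to_bits(finger_on):
    """Pack a list of 0/1 flags into an integer; the first element is the highest bit."""
    bits = 0
    for flag in finger_on:
        bits = (bits << 1) | flag
    return bits


def classify(finger_on):
    """Return the gesture name, or 'Unknown' for any pattern not in the table."""
    return GESTURES.get(to_bits(finger_on), 'Unknown')


print(classify([0, 1, 1, 0, 0]))  # Come
print(classify([1, 0, 1, 0, 1]))  # Unknown
```

Adding a new sign then becomes a single dictionary entry; for example, thumb + index + middle would be 0b11100.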

Drone Movement Control

```python
while True:
    hV = dV = vV = rV = 0
    if gesture == 'Land':
        break
    elif gesture == 'Stop' or gesture == 'Unknown':
        hV = dV = vV = rV = 0
    elif gesture == 'Right':
        hV = -15
    elif gesture == 'Left':
        hV = 15
    elif gesture == 'Up':
        vV = 20
    elif gesture == 'Down':
        vV = -20
    elif gesture == 'Come':
        dV = 15
    elif gesture == 'Away':
        dV = -15
    tello.send_rc_control(hV, dV, vV, rV)
    time.sleep(0.05)  # Short pause so this loop doesn't hog the CPU from the detection thread
```

With the real-time global variable gesture from hand_detection(), we command the drone movement with the send_rc_control command:

| Parameter | -100 – 0 | 0 – 100 |
| --- | --- | --- |
| Horizontal (hV) | move left | move right |
| Depth (dV) | move backward | move forward |
| Vertical (vV) | move down | move up |
| Rotation (rV) | rotate anti-clockwise | rotate clockwise |

Unlike the Face Detection project, we use hV to control left and right movement instead of rotation (rV). Oh… the 'Left/Right' in the program is from the user's point of view; from the drone camera's view, it is reversed.
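The if/elif chain can also be written as a lookup table. This is a minimal sketch assuming the same speeds as the code above; RC_COMMANDS, clamp100() and rc_for() are hypothetical names, and the clamp simply enforces the -100 to 100 range from the table. 'Stop', 'Unknown' and any unlisted gesture map to all zeros (hover), while 'Land' is still handled separately by breaking out of the loop.

```python
# Hypothetical table: (hV, dV, vV, rV) per gesture, i.e. the four send_rc_control() arguments.
# 'Left'/'Right' are from the user's point of view, which is mirrored for the drone,
# hence 'Right' uses a negative (leftward) horizontal speed.
RC_COMMANDS = {
    'Right': (-15, 0, 0, 0),
    'Left': (15, 0, 0, 0),
    'Up': (0, 0, 20, 0),
    'Down': (0, 0, -20, 0),
    'Come': (0, 15, 0, 0),
    'Away': (0, -15, 0, 0),
}


def clamp100(v):
    """Keep a speed inside the -100 .. 100 range accepted by the RC command."""
    return max(-100, min(100, v))


def rc_for(gesture):
    """Return the four RC speeds for a gesture; anything unlisted hovers."""
    return tuple(clamp100(v) for v in RC_COMMANDS.get(gesture, (0, 0, 0, 0)))


hV, dV, vV, rV = rc_for('Come')
print(hV, dV, vV, rV)  # 0 15 0 0
# In the control loop this would become: tello.send_rc_control(*rc_for(gesture))
```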

That's all for the project. It is quite straightforward and similar to the Face Detection project. Please leave a comment with any input. I am now studying how to do swarm flying with 3 Tello EDUs, and have just cleared some roadblocks…

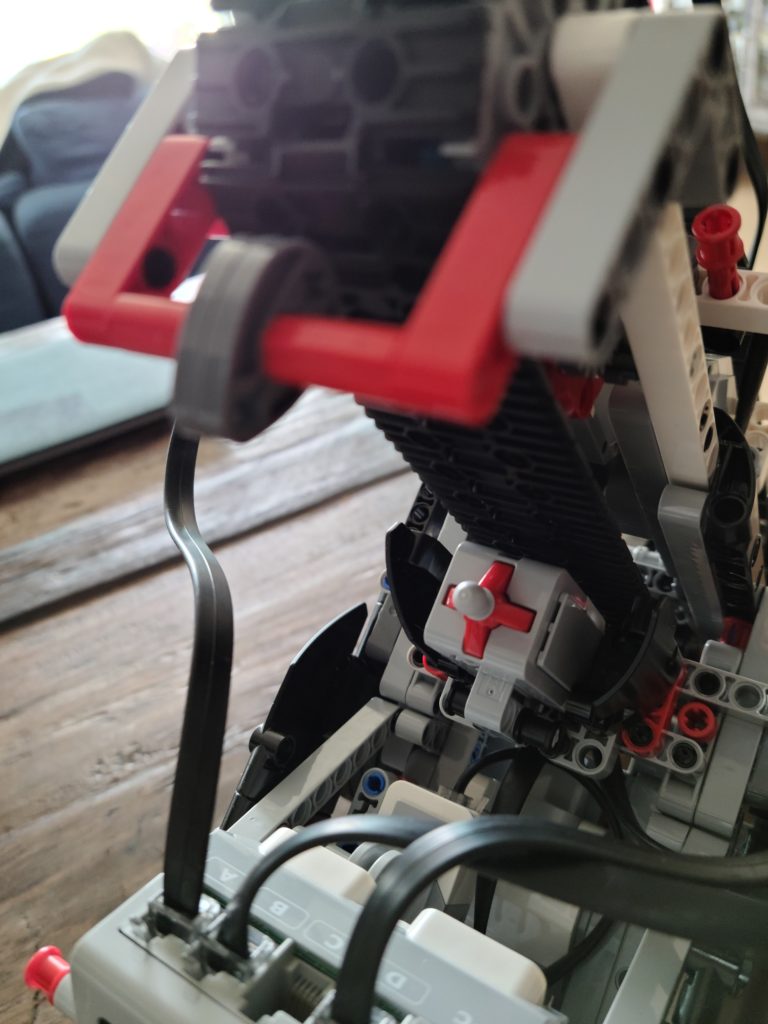

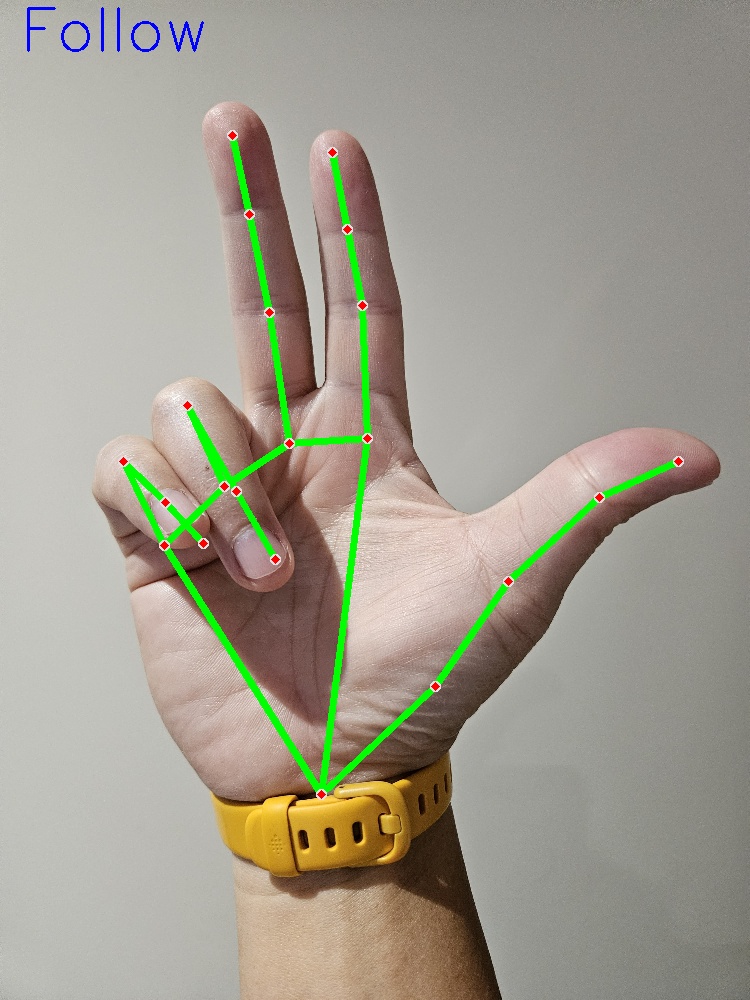

New Command – Follow

It just popped into my head that I can add a 'follow' command, like what I did for face detection and tracking. I believe we just need to copy & paste the code from the Face Detection project with some modifications.

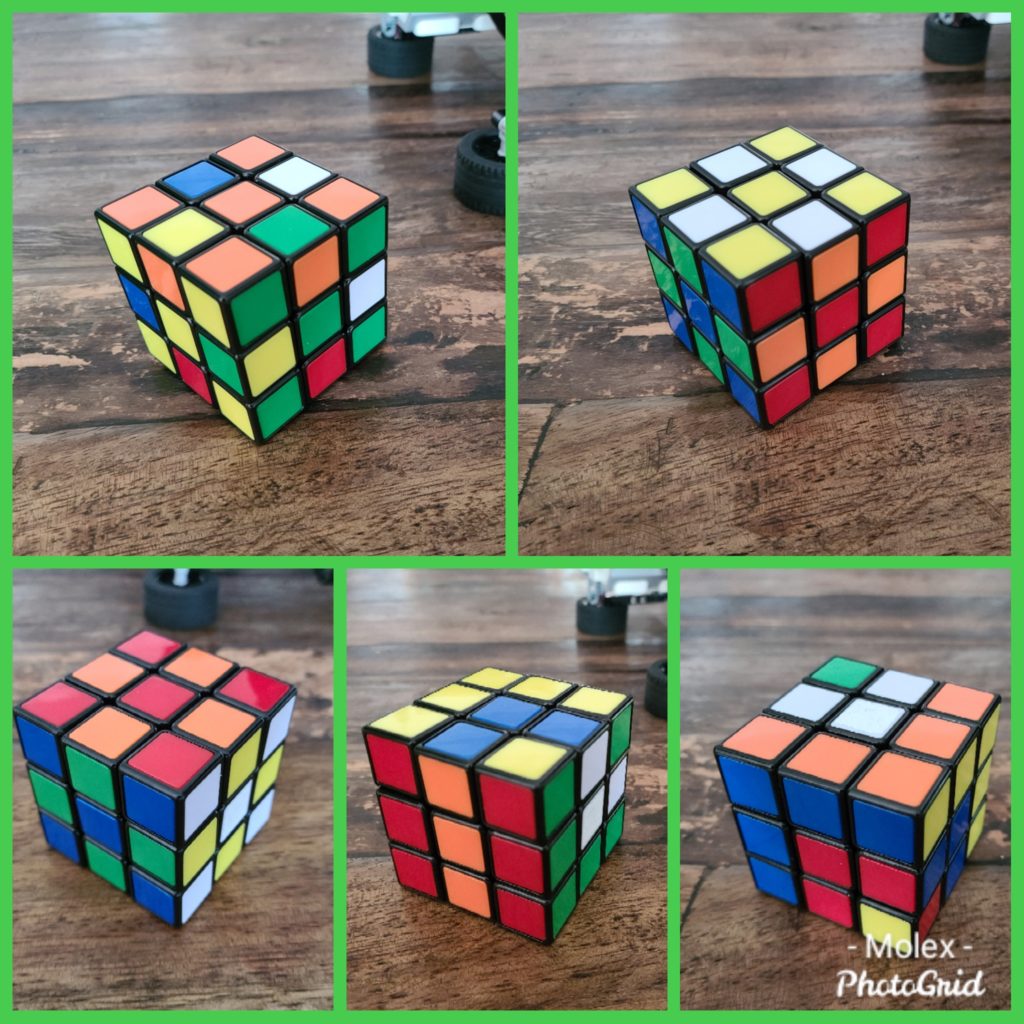

Above is the hand sign for 'follow'. Go ahead and modify the original code with the following changes.

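In hand_detection(), declare hand_center as a global next to gesture:

```python
global gesture
global hand_center  # New line for hand follow
```

Add a 'Follow' branch to the gesture if/elif chain; it also calculates the palm size:

```python
elif sum(finger_on) == 3 and finger_on[0] == finger_on[1] == finger_on[2] == 1:
    gesture = 'Follow'

    # Apply the Shoelace formula to calculate the palm size
    palm_vertices = [0, 1, 2, 5, 9, 13, 17]
    area = 0
    for j in range(len(palm_vertices)):
        x1, y1 = my_hand[palm_vertices[j]]
        # Pair each vertex with the NEXT palm vertex, wrapping back to the wrist
        x2, y2 = my_hand[palm_vertices[(j + 1) % len(palm_vertices)]]
        area += (x1 * y2) - (x2 * y1)
    area = 0.5 * abs(area)

    hand_center = my_hand[0][0], my_hand[0][1], area
```

In the main program, give hand_center a starting value before the thread starts:

```python
gesture = 'Unknown'
hand_center = 480, 360, 28000  # New line for hand follow
```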

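Finally, add the 'Follow' branch to the drone movement loop, before send_rc_control():

```python
elif gesture == 'Follow':
    x, y, size = hand_center

    # Rotate to keep the hand horizontally centred
    if x > 480:
        rV = -int((x - 480)/4.8)
    elif x < 480:
        rV = +int((480 - x)/4.8)
    else:
        rV = 0

    # Move up/down to keep the hand vertically centred
    if y > 360:
        vV = -int((y - 360)/3.6)
    elif y < 360:
        vV = int((360 - y)/3.6)
    else:
        vV = 0

    # Move backward/forward to keep the palm size within the target range
    if size > 30000:
        dV = -15
    elif size < 26000:
        dV = 15
    else:
        dV = 0

tello.send_rc_control(hV, dV, vV, rV)
```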

That’s all!

Shoelace Formula

For the code above, we use landmarks 0, 1, 2, 5, 9, 13 & 17 to calculate the area of the palm. This number indicates how close the palm is to the drone; we target a range of 26000 – 30000 (square pixels). Then we command the drone to fly toward or away from the hand, similar to the face tracking in the last project.
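To check the follow logic without a drone, the 'Follow' branch can be pulled out into a pure function. This is a minimal sketch that assumes a 960 x 720 frame (so the centre is the 480, 360 used above) and the 26000 – 30000 palm-area range; follow_velocities() is a hypothetical name, and x, y are the wrist position in the mirrored frame. Yaw and vertical speed are proportional to how far the wrist is from the frame centre (reaching 100 at the frame edge), while forward/backward speed is a fixed ±15 with a dead band so the drone holds its distance while the palm size is in range.

```python
FRAME_W, FRAME_H = 960, 720          # assumed Tello frame size
SIZE_MIN, SIZE_MAX = 26000, 30000    # target palm-area range from the text


def follow_velocities(x, y, size):
    """Return (dV, vV, rV) for send_rc_control(), matching the 'Follow' branch."""
    cx, cy = FRAME_W // 2, FRAME_H // 2
    # Yaw: proportional to the horizontal offset; 480 px off-centre -> 100
    rV = -int((x - cx) / (cx / 100))
    # Up/down: proportional to the vertical offset; 360 px off-centre -> 100
    vV = -int((y - cy) / (cy / 100))
    # Forward/backward: fixed speed outside the target range, zero inside it
    if size > SIZE_MAX:
        dV = -15
    elif size < SIZE_MIN:
        dV = 15
    else:
        dV = 0
    return dV, vV, rV


print(follow_velocities(480, 360, 28000))  # (0, 0, 0)
print(follow_velocities(400, 300, 31000))  # (-15, 16, 16)
```

Because int() truncates toward zero, the single expression -int((x - 480) / 4.8) gives the same result as the two separate x > 480 and x < 480 branches.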

But what I want to highlight is the palm area calculation. It led me to learn about the Shoelace Formula, which is a very interesting and powerful formula. I don't remember learning it before; maybe I returned it to my teacher already 😎. Anyway, have a look at the video below; it's worth watching to understand the Shoelace Formula.
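For reference, for a polygon whose vertices (x₀, y₀) … (xₙ₋₁, yₙ₋₁) are listed in order around the edge, the Shoelace Formula is A = ½ · |Σᵢ (xᵢ·yᵢ₊₁ − xᵢ₊₁·yᵢ)|, where the index wraps around so that vertex n is vertex 0. Below is a minimal standalone sketch; shoelace_area() and palm_area() are hypothetical helper names, and palm_area() assumes my_hand is the list of 21 (x, y) pixel tuples built in hand_detection().

```python
def shoelace_area(points):
    """Area of a simple polygon from its vertices in order (clockwise or anti-clockwise)."""
    n = len(points)
    total = 0
    for k in range(n):
        x1, y1 = points[k]
        x2, y2 = points[(k + 1) % n]  # wrap around to the first vertex
        total += x1 * y2 - x2 * y1
    return 0.5 * abs(total)


PALM_VERTICES = [0, 1, 2, 5, 9, 13, 17]  # landmark indices from the text


def palm_area(my_hand):
    """my_hand: list of 21 (x, y) pixel tuples, indexed by MediaPipe landmark number."""
    return shoelace_area([my_hand[i] for i in PALM_VERTICES])


print(shoelace_area([(0, 0), (4, 0), (4, 3), (0, 3)]))  # 12.0
```

The key detail is to step through the positions in PALM_VERTICES and wrap with % n, so each landmark is paired with the next palm vertex rather than with the next landmark number.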